Final Project: Programming ML Applications

For this project, I used the Kaggle dataset Large Purchases by the State of California to classify categorical and free-text procurement data into a four-tier taxonomy. The dataset resembles client data I had managed at Sievo, and free-text data was particularly tricky to classify heuristically.

Though the resulting model is a decision tree, my main goal for this project was to begin to develop a model for handling free-text data. I wanted to understand how free-text data could be cleansed, manipulated, and organized to be used for final models such as decision trees. Therefore, the project has several interim steps before producing the decision tree.

I hypothesized that treating the item name + description fields as a single “document”, then using a tf-idf vectorizer to weight term frequencies, would yield data well suited to clustering applications.

I first employed the Natural Language Toolkit (NLTK) to tokenize, stem, and then de-tokenize the text. Stemming was particularly important for building an accurate tf-idf vectorizer that appropriately weights the key words of the free text; this project relies on NLTK’s Snowball Stemmer. I then used scikit-learn’s tf-idf vectorizer to find normalized frequencies for 43,000 terms.
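A minimal sketch of the tokenize, stem, de-tokenize, and vectorize steps is shown here. The sample records, the `stem_document` helper, and the choice of `wordpunct_tokenize` are assumptions for illustration, not the project's actual code or data.

```python
from nltk.stem.snowball import SnowballStemmer
from nltk.tokenize import wordpunct_tokenize
from sklearn.feature_extraction.text import TfidfVectorizer

stemmer = SnowballStemmer("english")


def stem_document(text):
    """Tokenize, stem each alphabetic token, and rejoin into one string."""
    tokens = wordpunct_tokenize(text.lower())
    return " ".join(stemmer.stem(tok) for tok in tokens if tok.isalpha())


# Hypothetical (item name, description) pairs standing in for the real data.
records = [
    ("Laptop computers", "Portable computers for field staff"),
    ("Printer toner", "Toner cartridges for office printers"),
    ("Consulting services", "IT consulting for network upgrades"),
]

# Treat item name + description as a single "document" per purchase.
documents = [stem_document(f"{name} {desc}") for name, desc in records]

# Rows are documents, columns are stemmed terms, values are tf-idf weights.
vectorizer = TfidfVectorizer()
tfidf = vectorizer.fit_transform(documents)
print(tfidf.shape)
```

Because stemming happens before vectorizing, variants such as "computers" and "computer" collapse into one column instead of splitting their weight across two.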

43,000 terms was still too many for the clustering algorithm, so I performed latent semantic analysis through a truncated singular value decomposition to reduce the dimensionality of the cleansed text. Through tuning, 500 components produced the best results.

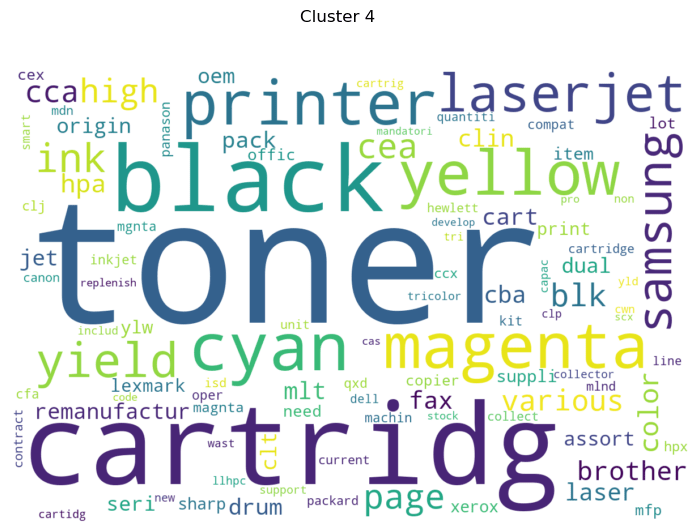
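The dimensionality reduction step can be sketched as follows. The toy corpus and `n_components=3` are assumptions chosen so the example runs on a few documents; the project itself settled on 500 components, and using explained variance as a tuning signal is one possible criterion rather than the confirmed one.

```python
from sklearn.decomposition import TruncatedSVD
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical stemmed documents standing in for the cleansed text.
documents = [
    "laptop comput portabl comput field staff",
    "printer toner toner cartridg offic printer",
    "consult servic it consult network upgrad",
    "desktop comput offic staff",
    "toner cartridg printer suppli",
    "network upgrad servic contract",
]

tfidf = TfidfVectorizer().fit_transform(documents)

# TruncatedSVD works directly on the sparse tf-idf matrix (this is LSA).
svd = TruncatedSVD(n_components=3, random_state=0)
reduced = svd.fit_transform(tfidf)

print(reduced.shape)
print(svd.explained_variance_ratio_.sum())
```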

I then tuned a k-means clustering algorithm to further reduce the dimensionality of the free text, taking the 500 components as input and producing 350 clusters. The resulting cluster assignment then stood in for the free-text fields in the decision tree classifier.
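A small sketch of the clustering step, under stated assumptions: the data comes from `make_blobs` rather than real SVD components, the candidate cluster counts are tiny, and the silhouette score is a stand-in tuning criterion that the write-up does not confirm.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic stand-in for the SVD components (60 rows, 5 dimensions).
X, _ = make_blobs(n_samples=60, centers=3, n_features=5, random_state=0)

# Try a few cluster counts and keep the one with the best silhouette score.
candidates = (2, 3, 4)
scores = {}
for k in candidates:
    trial_labels = KMeans(n_clusters=k, n_init=10, random_state=0).fit_predict(X)
    scores[k] = silhouette_score(X, trial_labels)
best_k = max(scores, key=scores.get)

# Each row gets one cluster id, which can replace the free-text fields
# as a single categorical feature downstream.
kmeans = KMeans(n_clusters=best_k, n_init=10, random_state=0)
labels = kmeans.fit_predict(X)
print(best_k, labels[:10])
```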

Clusters were “named” according to the top three words in terms of frequency, to provide more human readability to a complex series of text processing steps. I’ve always appreciated a decision tree’s ability to turn complex machine learning into a product non-technical coworkers and clients can readily understand.
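One way to produce such names is sketched below. It assumes "frequency" means raw word counts across the documents in a cluster; the `name_clusters` helper and the sample documents are hypothetical.

```python
from collections import Counter, defaultdict


def name_clusters(documents, labels, top_n=3):
    """Name each cluster after its top_n most frequent words."""
    counts = defaultdict(Counter)
    for doc, label in zip(documents, labels):
        counts[label].update(doc.split())
    return {
        label: " / ".join(word for word, _ in counter.most_common(top_n))
        for label, counter in counts.items()
    }


# Hypothetical stemmed documents and their cluster assignments.
documents = [
    "toner printer cartridg",
    "toner printer cartridg",
    "toner printer",
    "toner ink",
    "laptop comput charger",
    "laptop comput",
    "laptop",
]
labels = [0, 0, 0, 0, 1, 1, 1]

names = name_clusters(documents, labels)
for label, name in names.items():
    print(label, name)
```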

Finally, I tuned a decision tree classifier on the clusters as well as the acquisition type, sub-acquisition type, and department name variables. I built three models: a control model without text clusters, a model using the default tree attributes, and a model whose tree parameters were optimized through a grid search. Notably, the default tree accuracy did not meaningfully improve after the grid search, indicating that classification improvements need to come from outside the tree.
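The three-model comparison might look like the sketch below. Everything in it is synthetic: the category values, the target labels, and the parameter grid are made up, and the target is deliberately driven by the text cluster so that the control model has little to work with. It omits the sub-acquisition type variable for brevity.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import OneHotEncoder
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(0)
n = 200
acq = rng.choice(["IT Goods", "Non-IT Goods", "IT Services"], size=n)
dept = rng.choice(["Corrections", "Transportation", "Health"], size=n)
cluster = rng.choice(
    ["toner/printer", "laptop/comput", "consult/network", "vehicl/truck"], size=n
)

# Synthetic target that depends only on the text cluster (an assumption).
category_of = {
    "toner/printer": "Office Supplies",
    "laptop/comput": "Hardware",
    "consult/network": "Services",
    "vehicl/truck": "Fleet",
}
y = np.array([category_of[c] for c in cluster])

X_full = np.column_stack([acq, dept, cluster])
X_control = np.column_stack([acq, dept])  # control: no text clusters
Xf_tr, Xf_te, Xc_tr, Xc_te, y_tr, y_te = train_test_split(
    X_full, X_control, y, test_size=0.25, random_state=0, stratify=y
)


def make_tree():
    """One-hot encode the categorical columns, then fit a decision tree."""
    return make_pipeline(
        OneHotEncoder(handle_unknown="ignore"),
        DecisionTreeClassifier(random_state=0),
    )


control = make_tree().fit(Xc_tr, y_tr)
default = make_tree().fit(Xf_tr, y_tr)

param_grid = {
    "decisiontreeclassifier__max_depth": [3, 5, None],
    "decisiontreeclassifier__min_samples_leaf": [1, 5],
}
tuned = GridSearchCV(make_tree(), param_grid, cv=3).fit(Xf_tr, y_tr)

control_acc = control.score(Xc_te, y_te)
default_acc = default.score(Xf_te, y_te)
tuned_acc = tuned.score(Xf_te, y_te)
print(control_acc, default_acc, tuned_acc)
```

Comparing the default and tuned scores side by side is what shows whether the grid search adds anything beyond the default tree settings.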

Technology

Python, scikit-learn, NLTK

Time Spent

40 hours

Algorithms

tf-idf, SVD, k-means clustering, decision tree

Date Completed

November 2023

Check out the code for this project

Read the accompanying paper for this project